Introduction

The storage (disk) subsystem is poorly understood even by people who work in the big data area, where improving performance by a mere 5% may save a fortune. Very few interviewees are able to explain how data gets from an application to a storage device and what factors affect the performance of this process. Extra complexity comes from the fact that two types of devices currently coexist: HDD and SSD. Each of them has a unique set of benefits and drawbacks. This sometimes results in terribly inefficient software designs. Hardware planning also becomes a guessing game rather than an exercise in logical reasoning. Over- and under-provisioning may easily happen, the latter leading to project failure in the worst case.

The goal of this article is to provide overall coverage of the storage subsystem with the main focus on performance. It is split into theoretical and practical parts. The theoretical part is dedicated to the components of the IO stack, with particular attention to modern data storage devices: HDD and SSD. The theory of operation provides the basis for explaining the performance advantages and limitations of each device; real-world test results are included as well. The practical part lists various methods of performance improvement and also gives hands-on advice about everyday tasks.

The reader is expected to have previous experience of programming and system administration in a Linux environment.

Theory

IO stack overview

Let’s look at what happens when an application makes an IO request, such as read(2).

Each time a request is issued, it passes through the following layers:

First, the request, in the form of a call to a C function, goes from the userspace process into libc. Server-oriented Linux distributions use GNU libc (glibc), but others may use a different implementation, such as the Bionic libc used in Android. The role of (g)libc is to provide a convenient means of interacting with the kernel: it checks arguments for invalid values, sets up registers to satisfy the kernel calling conventions (ABI) and sets errno on return. Without (g)libc, software engineers would have to write architecture-dependent assembly code to perform syscalls themselves.

Next, the IO syscall, which has the same name as the corresponding (g)libc function, enters kernel space and is routed to the code of the filesystem responsible for the passed file descriptor. The filesystem maps the hierarchical structure of directories and regular files onto the linear address space of a block device. Depending on the syscall, the filesystem may trigger several IO requests to lower levels. For example, reading a portion of data from a file may trigger a read request and also a write request to the file’s metadata to update the access time.

The next level is the page cache. Filesystem contents are extensively cached in RAM, so in a typical system the chances that requested data is found in the page cache are high. If requested data is found here, it is returned immediately without propagating the IO request to lower levels. Otherwise, the request goes to the IO scheduler, also called an elevator.

The scheduler is responsible for the order in which requests are sent to the storage device. Usually this order is chosen to provide fairness among all processes with respect to IO priorities. When the device is ready to serve the next request, the scheduler pops it from the head of its queue and passes it to the driver.

Drivers may be organized into a multi-tiered stack for better code reuse. For example, the topmost driver may be responsible for encoding IO requests into SCSI commands, while the bottommost driver may implement the protocol for sending these SCSI commands to a specific model of disk controller. The ultimate goal of the driver stack is to provide a transparent means of communicating with a variety of hardware controllers and interfaces.

Workstations and cheap servers have the controller integrated into the chipset, while more expensive servers have a separate dedicated controller chip soldered to the motherboard; standalone expansion cards inserted into PCIe slots also exist. The controller’s task is to provide reliable delivery of commands to connected hardware and of responses back. It converts commands between software and wire representation, computes checksums, triggers hot-plug events and so on. Some controllers have their own small cache and IO scheduler; this is particularly true of controllers capable of hardware RAID.

Ultimately, the request reaches the storage device. Once the device completes serving it, the response is sent back and passes through all these layers from bottom to top until the data and response code are delivered to the userspace application. This completes the IO operation.

Many more layers may be plugged into this IO stack, for example by using NFS or multi-tiered RAIDs. Non-essential layers may also be removed: e.g. it is possible to bypass the page cache by issuing an IO request with the so-called “direct” flag set on it. In any case, the above stack is the core of the IO subsystem of all sorts of computers, ranging from smartphones to enterprise servers.

Performance metrics

As with all request-oriented systems, computer storage performance is measured by using two common metrics: throughput and response time.

Throughput comes in two flavours: either as read/write speed in megabytes per second (MB/s) or as the number of completed IO operations per second (IOPS). The first flavour is better known because it is reported by a variety of command line utilities and GUI programs. It is usually the decisive metric in systems where operations are large, such as video streaming. Conversely, IOPS is used primarily in contexts where operations are relatively small and independent from one another, such as retrieving rows of a relational database by their primary keys. Whether it is MB/s or IOPS, throughput is a macro metric by nature: it doesn’t say anything about each separate operation. Whether you have a single large operation or a group of smaller ones, whether IO occupies 100% of application time or only a fraction of it, whether identical operations take the same amount of time to complete or differ by orders of magnitude: all these cases may produce the same throughput value.

Using throughput as the single metric may be sufficient for offline batch processing systems. A high value means good hardware utilization, while a low value means wasted hardware resources. But things become different if you are building an online system performing multiple independent requests in parallel. Because you need to satisfy constraints for each separate request, response time (the time spent doing a single IO operation, in ms) becomes a key factor. Of course, we want it to be as small as possible.

In an ideal world, response time is directly derived from throughput and request size:

$$\text{response time} = \frac{\text{request size}}{\text{throughput}}$$

However, there are a lot of subtleties in practice:

- response time can never be zero because the storage device needs some time to start accessing data
- operations may influence one another, so the order of operations is important
- if multiple operations are issued simultaneously, they may end up waiting in a queue

The result is that response time values are scattered along a wide range even if requests are identical in type and size. As such, we need some way to combine them into a single number. Most often, the average response time over a fixed time window is used for that purpose (“a single operation completed in 15 ms on average during the last minute”). Unfortunately, this naive metric is too general to reveal any significant information. Consider three different systems, each completing the same sequence of 10 operations. Their response times are listed below:

(1) 25 25 25 25 25 25 25 25 25 25

(2) 45 45 45 45 45 5 5 5 5 5

(3) 205 5 5 5 5 5 5 5 5 5

All these systems have the same average response time of 25 time units, so by this metric they are completely indistinguishable. But it is obvious that some of them are preferable to the others. Which one is the best? This depends on the application. If the operations are independent (such as in a file server), then I’d say that (3) is the best one: the system managed to complete almost all operations very fast, in just 5 time units each; one slow operation is not a big problem. On the other hand, if these operations are part of some larger entity and are issued simultaneously (so that total time is limited by the slowest operation), then (1) is the best.

The metric that takes all such reasoning into consideration is the percentile. Actually, it is not a single metric but rather a family of metrics with a given parameter $p$. The $p$-percentile is defined as the response time such that $p$ percent of operations complete no slower than this value and the remaining $100 - p$ percent of operations are slower. $p$ is usually selected to be 90%, 95%, 99% or 100% (the last value is simply the slowest operation in the given time window). The three systems above have 90-percentile response times of 25, 45 and 5 time units respectively. The idea of the percentile metric is that if you are satisfied with the 90-percentile response time, then you are definitely satisfied with all response times less than or equal to it. Their specific values are of no importance.

Variations are also in use. It may be convenient to keep track of multiple percentiles simultaneously: 95, 99, 100. Or, if there is an agreed target response time value $t$, then it makes sense to use the “inverse” of the percentile: the percentage of operations that are slower than $t$.
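The percentile and its “inverse” can be sketched in a few lines of C. This is only an illustration under one assumption: it uses the nearest-rank definition (the smallest sample with at least the requested percentage of samples at or below it), which is one of several conventions in use. The function names `percentile` and `percent_slower_than` are made up for this example.

```c
#include <stdlib.h>
#include <string.h>

/* Comparison callback for qsort: ascending order of doubles. */
static int cmp_double(const void *a, const void *b)
{
    double x = *(const double *)a, y = *(const double *)b;
    return (x > y) - (x < y);
}

/*
 * Nearest-rank percentile (an assumed convention): the smallest sample
 * such that at least p percent of all samples are less than or equal to it.
 * Returns -1.0 on invalid input or allocation failure.
 */
double percentile(const double *samples, size_t n, unsigned p)
{
    if (n == 0 || p == 0 || p > 100)
        return -1.0;
    double *sorted = malloc(n * sizeof *sorted);
    if (sorted == NULL)
        return -1.0;
    memcpy(sorted, samples, n * sizeof *sorted);
    qsort(sorted, n, sizeof *sorted, cmp_double);
    size_t rank = (n * p + 99) / 100;   /* ceil(n * p / 100), 1-based */
    double result = sorted[rank - 1];
    free(sorted);
    return result;
}

/* Percentage of samples strictly slower than the target response time t. */
double percent_slower_than(const double *samples, size_t n, double t)
{
    size_t slower = 0;
    for (size_t i = 0; i < n; i++)
        if (samples[i] > t)
            slower++;
    return n ? 100.0 * (double)slower / (double)n : 0.0;
}
```

Fed with the three lists above and `p = 90`, `percentile` returns 25, 45 and 5, matching the values quoted in the text.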

Because all the described metrics are connected together, it is not possible to optimize one without sacrificing others. Some projects define their goal as optimization of a single metric, while others have a combination of constraints. Examples of such goals:

- Web server (public service): be able to handle at least 1000 IOPS while keeping the 95-percentile response time at the 500 ms level. The goal here is to provide virtually instant service to almost all of the 1000 users who visit the site at peak hour.
- Video projector (realtime system): be able to read each separate frame in no more than 40 ms. The goal here is to prevent buffer underflow so that the video sequence is not interrupted (assuming 25 frames per second).
- Backup storage (batch system): provide a sustained read speed (throughput) of at least 70 MB/s. This is required so that a typical backup image of 250 GB can be restored in one hour in case of critical data loss.

Access patterns

What most people fail to understand is that the throughput figure (MB/s) given in a device specification will virtually never be achieved. The reason is that the response time of each single request is limited by access time: an extra price that must be paid for each request, no matter how small it is. The general formula for computing the response time of a single request is the following:

$$\text{response time} = \frac{\text{request size}}{\text{throughput}} + \text{access time}$$

Access time is a combination of all sorts of delays that do not depend on request size:

- Each request must pass through a number of software layers before it is sent to the device: userspace → kernel → scheduler → driver(s). The response also passes through these layers, but in the reverse direction.
- Transfer by wire also has some latency, which is particularly large for remote NASes.
- Finally, the device may need to make some preparations after receiving the request and before it is able to stream data. This factor is the major contributor when HDDs are used: an HDD must perform a mechanical seek, which takes on the order of milliseconds.

But once the random access price is paid, data comes out at a fixed rate limited only by the throughput of the device and the connecting interface. The chart below displays the relation between request size and effective throughput for three hypothetical devices with the same rated throughput (200 MB/s) but different access times. Even to get 50% efficiency (100 MB/s), one needs to make requests of 32 KiB, 256 KiB and 2 MiB respectively. Issuing small requests is very inefficient; this can be easily observed when copying a directory containing a large number of small files. Consider the following analogy: it makes no sense to enter a store in order to purchase a bottle of beer for $1 if the entrance fee is $100.
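The relation between request size and effective throughput follows from the response time of a single request: transfer time plus access time. The sketch below is a model under that assumption only; it ignores queueing and caching, and the function names are invented for illustration.

```c
/*
 * Response time of a single request, in seconds:
 *   response time = request size / throughput + access time
 */
double response_time(double size_bytes, double throughput_bps, double access_time_s)
{
    return size_bytes / throughput_bps + access_time_s;
}

/* Effective throughput in bytes per second for requests of the given size. */
double effective_throughput(double size_bytes, double throughput_bps, double access_time_s)
{
    return size_bytes / response_time(size_bytes, throughput_bps, access_time_s);
}

/*
 * Request size needed to reach efficiency e (0 < e < 1) of rated throughput.
 * Derived from size / (size + throughput * access_time) = e.
 */
double size_for_efficiency(double e, double throughput_bps, double access_time_s)
{
    return e / (1.0 - e) * throughput_bps * access_time_s;
}
```

With a hypothetical access time of 1 ms and a rated throughput of 200 MB/s, `size_for_efficiency(0.5, 200e6, 0.001)` gives about 200,000 bytes: to reach 50% efficiency, a request must be as large as the amount of data the device could have streamed during one access time.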

Such sigmoidal behaviour led to the idea of dividing access patterns into two classes: sequential and random. Most real-world applications generate IO requests belonging to strictly one of these two classes.

- Sequential access

  Sequential access arises when requests are large enough that access time plays very little role; response time is dominated by the time it takes to transfer data from storage media to the application or vice versa. Throughput (MB/s) is the main metric for this type of pattern. This is the optimal mode of operation for storage devices: throughput efficiency is somewhere in the right part of the above graph and is close to 100%. All of the following tasks cause sequential access:

  - making a copy of a large file (in contrast to copying a lot of small files)
  - making a backup of a raw block device or a partition
  - loading a database index into memory
  - running a web server to stream HD-quality movies

- Random access

  Random access is the opposite of sequential access. Requests are so small that the second summand of the above formula, access time, plays the primary role. IOPS and response time percentile metrics become more important. Random access is less efficient but is inevitable in a wide range of applications, such as:

  - making copies of a large number of small files (in contrast to making a copy of a single large file)
  - issuing SQL SELECTs, each one returning a single row by its primary key
  - running a web server to provide access to small static files: scripts, stylesheets, thumbnails

It should be obvious that some operations are neither purely sequential nor purely random. The middle of the graph is exactly the place where both random and sequential components make a significant contribution to response time. Neither of them may be ignored. Such an access pattern may be the result of careful request size selection to keep a balance between response time and throughput, for example in multiclient server environments.

Performance-related concepts

The concepts presented below are not exclusive to mass storage devices but are the same for all types of memory found at all levels of the application stack, ranging from DRAM chips to high-level pieces of software such as distributed key-value databases as a whole. These concepts are implemented at least twice in the storage stack:

- in the OS kernel
- in intermediate electronics, such as port expanders and hardware RAID controllers (if present)
- in the storage device itself

Caching

Storage devices are very slow compared to other types of memory. The image below visualizes the memory hierarchy found in modern computers. When moving from top to bottom, memories grow in capacity, but the tradeoff is longer access time. The largest drop occurs between storage devices and RAM: it is on the order of 1,000 for SSD and 10,000 for HDD. This should not come as a surprise because the storage device is the only one in this hierarchy capable of persistence (the ability to store data without power for a prolonged amount of time), which doesn’t come for free.

If we collect statistics about accesses to storage, we may see that only a small fraction of data is accessed often, while the large bulk of remaining data is virtually never accessed. The actual distribution varies depending on the purpose of the application, but the general concept of dividing data into “hot” and “cold” proves to be correct for the vast majority of applications.

This observation leads to the idea of caching storage data: making a copy of “hot” data in much faster RAM. Caching reduces access times to nearly zero for “hot” data and also helps to increase throughput, but to a lesser extent. Caching is so natural and effective that it is implemented in all nodes on the path from storage device to user application:

- storage devices have internal caches
- hardware RAID controllers and port expanders may have caches
- the operating system maintains a disk cache in RAM
- applications may implement their own domain-specific caches

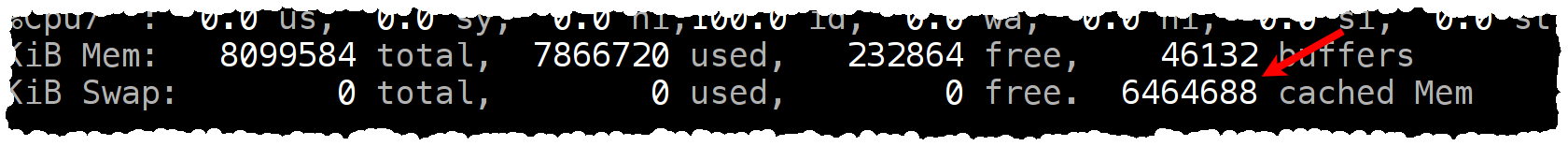

Usually the disk cache found in the OS is the most important cache because it is the largest one. Hardware cache sizes are fixed during production and rarely exceed 1 GB, while the disk cache is limited only by available RAM, which is much easier to expand. The operating system is free to use all RAM not directly requested by applications to transparently maintain the cache. It is not uncommon to see disk caches of hundreds of gigabytes. This even led to a software architecture pattern in which an application relies on all of its data being cached as part of normal workflow and would fail to meet its performance goals otherwise.

Speculative reads

The idea of speculative reads is to anticipate read requests from the upper layer. When requests are scattered randomly all over the storage address space, no prediction is possible. But when the order of previously accessed addresses is regular, it is easy to predict which addresses will be accessed next. The set of recognized patterns varies between different types of memory and implementations. In data storage, read-ahead is almost always implemented: it recognizes the basic pattern in which addresses are accessed in sequence in ascending order. For example, if data from addresses $n, n+1, n+2$ was requested, then read-ahead may decide to speculatively read data from locations $n+3, n+4, n+5$, … into cache. Conversely, speculative reading of addresses that are accessed in descending order is called read-behind.

Read-ahead and read-behind are typically implemented in all layers of the storage stack where a cache is present. As with caching, the most notable implementation is found in the OS. The OS has much more information than the hardware: it is able to relate requested addresses to files, and it knows exactly which process issued a request and what its priority is. As such, the mechanism of speculative reads fits naturally into the operating system’s IO stack (in Linux it works together with the page cache). Being a software implementation, it is also very flexible: it allows settings to be modified for each separate file programmatically. A software engineer may wish to increase the volume of data read in advance for some file or, vice versa, to disable speculative reads entirely. Storage devices also have a read-ahead implementation, but it is limited to reading a couple of hundred kilobytes of data located after the last request if the device becomes idle. This may help in embedded systems without a sophisticated IO scheduler.

Speculative reads may be executed online or in the background. In the first case, the initial request size is extended by the read-ahead/read-behind value. For example, if you issue a request to read 100 KB, it may be transparently extended to 250 KB: the first 100 KB is returned to you as requested, while the remaining part is stored in cache. This takes a bit longer to complete but is still beneficial for devices with long access time, such as HDDs. Background speculative reads work similarly. The only difference is that they are done by additional requests, which are issued in the background when there are no requests of higher priority to serve.
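On POSIX systems, one way to adjust speculative reads for a single file is `posix_fadvise()`. The sketch below wraps the two relevant hints; the wrapper names `hint_sequential` and `hint_random` are invented for this example, and the exact effect of each hint is implementation-defined (the comments describe what Linux documents).

```c
#ifndef _XOPEN_SOURCE
#define _XOPEN_SOURCE 600   /* expose posix_fadvise() in <fcntl.h> */
#endif
#include <fcntl.h>

/*
 * Hint that the file will be read sequentially.
 * On Linux this enlarges the read-ahead window for the file.
 * Returns 0 on success or an error number on failure.
 */
int hint_sequential(int fd)
{
    /* offset 0 with length 0 means "the whole file" */
    return posix_fadvise(fd, 0, 0, POSIX_FADV_SEQUENTIAL);
}

/*
 * Hint that the file will be accessed at random offsets.
 * On Linux this disables read-ahead for the file.
 */
int hint_random(int fd)
{
    return posix_fadvise(fd, 0, 0, POSIX_FADV_RANDOM);
}
```

Both calls are advisory: the kernel is free to ignore them, so they should be treated as a tuning knob to be measured rather than as a guarantee.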

Writeback

Reads from memory are always synchronous: you can’t return control to the requesting application without the actual data. When an application asks the OS kernel to read some piece of data, the application is blocked while the data is physically being fetched from storage or from cache. But writing is another story. Think of a write as a delayed request. By default, the operating system does not interact with storage during write() system calls. Instead, it copies the user-supplied data into a kernel buffer and returns control to the application immediately. Copying to RAM is very fast compared to accessing storage and has to be done anyway because hardware (DMA) requires the buffer to have a specific alignment and location in physical memory. The actual write happens at some later point in the background (typically no more than a couple of seconds after it was requested), concurrently with the unblocked application. Hence the terms writeback and write buffering. In the best case, this approach cuts running time to 50% for applications that would otherwise be active for one half of the time and blocked by write IO for the other half. Without buffered writes, software engineers would have to use multiple threads or nonblocking IO explicitly to achieve the same result, which takes longer to code and is harder to debug.

The problem with writeback is that if something goes wrong (for example, power is lost), then buffered but not yet executed writes are also lost. To resolve this issue, operating systems provide means of explicit synchronization. These take the form of system calls of different granularity which block application execution until all previously issued writes are actually executed.

It is important to remember that writes and reads are very different: they are by no means symmetric. In particular, if a write() of 5 GB of data seems to complete in one second, it doesn’t mean that your disk has a speed of 5 GB/s. At that moment the data is not written yet, only buffered in the kernel.
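The difference between a buffered write and a write that has actually reached the device can be sketched with `write()` followed by `fsync()`, one of the synchronization calls available on POSIX systems. The helper name `write_durably` is made up for this example, and error handling is kept minimal.

```c
#include <errno.h>
#include <stddef.h>
#include <unistd.h>

/*
 * Write the whole buffer, then block until the kernel reports that the
 * data of this file has been transferred to the storage device.
 * Returns 0 on success, -1 on error (errno is set by the failing call).
 */
int write_durably(int fd, const void *buf, size_t len)
{
    const char *p = buf;
    while (len > 0) {
        ssize_t n = write(fd, p, len);   /* may write less than requested */
        if (n < 0) {
            if (errno == EINTR)
                continue;                /* interrupted by a signal, retry */
            return -1;
        }
        p += n;
        len -= (size_t)n;
    }
    /* Without this call the data may still sit only in kernel buffers. */
    return fsync(fd);
}
```

Timing the `write()` loop alone measures the speed of copying into RAM; only the time up to the return of `fsync()` says something about the device.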

Write combining and reordering

Buffering of writes is beneficial to performance not only because of increased concurrency; it also makes it possible for the OS to apply device-dependent optimizations that are transparent to the user application.

The first optimization is write combining. Applications tend to use small buffers to write large sequential chunks of data. Instead of issuing each small request to the storage device separately, the OS groups them into larger requests. As a result, the access time cost is paid much less often on average than it would be otherwise.

Another optimization is write reordering. Whether and how it is used depends on the type of storage device. For example, access time for a hard disk drive depends, among other things, on the absolute delta between the previously accessed address and the currently accessed address. If three requests arrive in sequence and the second one targets an address far away from the other two, then it is beneficial to reorder them so that the two neighbouring addresses are served back to back. This reduces the cumulative delta between consecutive requests.
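The effect of reordering on the cumulative delta can be checked with a small sketch. Sorting by ascending address is used here only as the simplest possible policy, which is an assumption of this example; real schedulers also take the current head position, fairness and deadlines into account. The function names and the sample addresses in the note below are hypothetical.

```c
#include <stdlib.h>

/* Sum of absolute address deltas between consecutive requests. */
unsigned long cumulative_delta(const unsigned long *addr, size_t n)
{
    unsigned long total = 0;
    for (size_t i = 1; i < n; i++)
        total += addr[i] > addr[i - 1] ? addr[i] - addr[i - 1]
                                       : addr[i - 1] - addr[i];
    return total;
}

/* Comparison callback for qsort: ascending order of addresses. */
static int cmp_addr(const void *a, const void *b)
{
    unsigned long x = *(const unsigned long *)a;
    unsigned long y = *(const unsigned long *)b;
    return (x > y) - (x < y);
}

/* Reorder pending requests in place by ascending address. */
void reorder_ascending(unsigned long *addr, size_t n)
{
    qsort(addr, n, sizeof *addr, cmp_addr);
}
```

For made-up addresses 100, 900, 110 the cumulative delta in arrival order is 800 + 790 = 1590; after `reorder_ascending` the order becomes 100, 110, 900 and the delta drops to 10 + 790 = 800.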

Write reordering creates the problem of write hazards. Applications may depend on strict ordering of request execution to guarantee logical consistency of data. For example, consider two applications, one producer and one consumer, which communicate by means of the filesystem. When the producer wants to send some data, it makes two requests: first it writes the new data and then it writes a pointer to the new data into a predefined location. If the order of requests is reversed by the OS, then it is possible that the consumer reads the new pointer value first. It makes a further read by dereferencing this pointer and sees garbage because the actual data has not been written yet. Applications which depend on strong ordering must explicitly enforce it by using synchronization system calls. Issuing such a syscall between the two writes would remove the write hazard in the above example.
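The producer side of this example might be sketched as follows, assuming POSIX `pwrite()` and `fdatasync()`. The file layout (payload at `data_off`, an 8-byte pointer in host byte order at `ptr_off`) and the function name `publish` are inventions of this example, not something prescribed by the text.

```c
#ifndef _XOPEN_SOURCE
#define _XOPEN_SOURCE 600   /* expose pwrite() and fdatasync() */
#endif
#include <stddef.h>
#include <stdint.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * Write the payload at data_off, force it to storage, and only then
 * write the pointer at ptr_off.
 * Returns 0 on success, -1 on error. Short writes are treated as errors
 * to keep the sketch small.
 */
int publish(int fd, const void *data, size_t len, off_t data_off, off_t ptr_off)
{
    if (pwrite(fd, data, len, data_off) != (ssize_t)len)
        return -1;
    if (fdatasync(fd) != 0)          /* barrier: payload is written first */
        return -1;

    uint64_t ptr = (uint64_t)data_off;
    if (pwrite(fd, &ptr, sizeof ptr, ptr_off) != (ssize_t)sizeof ptr)
        return -1;
    return fdatasync(fd);            /* make the pointer durable as well */
}
```

The first `fdatasync()` is what enforces ordering; the second one only controls when the pointer itself becomes durable.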

Read-modify-write

Memory devices rarely allow access to single bits or even bytes. This limitation is the result of internal device organization and also simplifies protocols and ECC handling. The minimal unit for mass storage devices is the sector, which is 512 or 4096 bytes depending on the device model. When the OS sends a request to the device, it specifies the index of the first sector, the number of sectors to read/write and a pointer to the data buffer. Devices do not have any API to access sectors partially.

Such a strong limitation is not convenient for user applications, so the OS transparently transforms arbitrary byte-aligned user requests into larger sector-aligned requests. This doesn’t create substantial performance problems for reads: the access time cost must be paid anyway, and it is much larger than the time required to read extra bytes.

The situation with writes is much worse. When an application makes a write request with an address or size not aligned to the sector size, the OS transparently performs the infamous read-modify-write sequence (RMW for short):

- first, the sectors which must be written only partially are read (#5 and #9 in the image below)
- then the data from these sectors which needs to be preserved is masked and merged into the main buffer
- and only then all affected sectors are written to the storage device (#5, #6, #7, #8, #9)

At first glance this doesn’t seem to degrade performance, because only the first and last sectors may be written partially, no matter how large the request is. Inner sectors are always written in full and thus don’t need to be read first. But the problem is that the above steps can’t be performed simultaneously, only one after another. As a result, effective write response time becomes poor: in the worst case it may be twice as long as read response time.

If a write request is aligned exactly to sector boundaries, then RMW doesn’t happen and there is no performance penalty. Note that high-level hardware (RAID arrays) and software (OS cache and filesystem) may increase the minimal RMW-avoidable unit from the sector size to an even higher value.
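Whether a given write triggers RMW can be worked out from its offset and length alone. The following sketch maps a byte range onto sectors and counts how many sectors would have to be read first; the type `sector_span` and both function names are hypothetical, and the sketch assumes `len > 0`.

```c
#include <stdbool.h>
#include <stdint.h>

/* Sector-aligned request covering an arbitrary byte range. */
struct sector_span {
    uint64_t first_sector;   /* index of the first affected sector */
    uint64_t sector_count;   /* number of affected sectors */
    bool head_partial;       /* first sector is only partially overwritten */
    bool tail_partial;       /* last sector is only partially overwritten */
};

/* Map a byte range (offset, len > 0) onto sectors of sector_size bytes. */
struct sector_span span_for_write(uint64_t offset, uint64_t len, uint32_t sector_size)
{
    uint64_t end = offset + len;                 /* one past the last byte */
    uint64_t first = offset / sector_size;
    uint64_t last = (end - 1) / sector_size;
    struct sector_span s = {
        .first_sector = first,
        .sector_count = last - first + 1,
        .head_partial = offset % sector_size != 0,
        .tail_partial = end % sector_size != 0,
    };
    return s;
}

/* Number of sectors that must be read before the write is issued (0, 1 or 2). */
unsigned rmw_reads(struct sector_span s)
{
    if (s.sector_count == 1)
        return (s.head_partial || s.tail_partial) ? 1 : 0;
    return (unsigned)s.head_partial + (unsigned)s.tail_partial;
}
```

With 512-byte sectors, a write of 2148 bytes at offset 2660 covers sectors 5 through 9 with both ends partial, so two sectors must be read first; a write of 2560 bytes at offset 2560 covers the same sectors but needs no reads at all.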

Parallelism

Most elementary memory devices are able to serve only a single request at a time and demonstrate poor sequential speed. Combining multiple such devices together results in better performance. This technique is commonly employed in all types of memory:

- SSDs are equipped with 4-16 flash chips; each chip has 2-8 logical units (LUNs)
- DRAM modules contain 8-16 DRAM chips per rank
- DRAM controllers have 2 channels
- RAID arrays are built atop 2-8 member devices

Such organization is beneficial to performance of both sequential and random access patterns.

Sequential access is faster due to interleaving. The idea is to organize addressing in such a way that parts of a single large request are served simultaneously by different elementary memory devices. If these parts turn out to be identical in size, then effective sequential speed is multiplied by the number of attached elementary devices. The more devices are connected together, the faster the cumulative sequential speed.

Random access benefits from the fact that a large number of small requests may be served in a truly parallel way provided they are evenly distributed across elementary devices. Without such organization, requests would have to wait in a queue for prolonged periods of time, thus worsening response time from the software perspective.

The good thing about parallelism is that its scalability is virtually infinite: by combining more and more elementary memory devices together it is possible to achieve hundreds or thousands of times faster sequential speed, or to serve hundreds or thousands of simultaneous requests in a truly parallel way. The bad thing is that access time can’t be lowered by this technique. Even if you manage to get a 1000x improvement in throughput, the response time of small requests is still the same as if only a single elementary device were present. Access time and, as a result, the overall response time of a standalone request can be improved only by switching to a faster technology.
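Interleaving boils down to an address mapping. The sketch below shows one possible mapping, round-robin striping in fixed-size units, as used for example by RAID-0 style layouts; it is an assumption of this example, and actual devices may interleave differently. The names `stripe_location` and `locate` are hypothetical.

```c
#include <stdint.h>

/* Location of a byte inside an interleaved (striped) array. */
struct stripe_location {
    uint32_t device;         /* index of the elementary device */
    uint64_t device_offset;  /* byte offset inside that device */
};

/*
 * Round-robin interleaving: consecutive units of unit_size bytes go to
 * consecutive devices, wrapping around after the last one.
 */
struct stripe_location locate(uint64_t addr, uint32_t unit_size, uint32_t devices)
{
    uint64_t unit = addr / unit_size;        /* global unit index */
    struct stripe_location loc = {
        .device = (uint32_t)(unit % devices),
        .device_offset = (unit / devices) * unit_size + addr % unit_size,
    };
    return loc;
}
```

With 4 devices and a 64 KiB unit, a 256 KiB sequential request touches each device exactly once, so all four can transfer their part at the same time, while a single 4 KiB request still lands on one device and pays its full access time.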

Hard disk drive (HDD)

Hard disk drives first appeared in the 1950s. Rapid advancement in technology led to an increase in capacity by orders of magnitude, so that a modern HDD is able to store up to 10 TB of data at a price as low as 2.5 cents per gigabyte. A serious disadvantage of hard disk drives is that their operation relies on mechanical principles. Actually, the HDD is the last device in a computer which is not based on purely electronic or electrical phenomena (cooling fans are not taken into account because they are not involved in processing data). As a result, hard disk drives have the following disadvantages:

- HDDs are prone to mechanical failure. Vibrations or the elevated temperatures found in densely packed server rooms make HDDs fail after only a couple of years of service.
- Precise mechanical operation requires heavy metallic components, which makes HDDs impractical in mobile devices. A typical 3.5" HDD weighs about 0.7 kg.
- And, most importantly, very long access time, which hasn’t improved greatly since the advent of HDDs. This is the primary reason why HDDs are steadily being replaced by SSDs.

However, the HDD’s killer feature, a very low price per gigabyte, makes it the number one choice in applications where price is more important than performance, such as multimedia storage, batch processing systems and backups.

Theory of operation

Internals

An HDD stores information in magnetic form on the surface of a platter. The platter is covered with a thin layer of ferromagnetic material and is perfectly polished. While the platter surface seems like a single entity from a macroscopic point of view, it is logically divided into very small magnetic regions (~30x30 nm). Each magnetic region has its own direction of magnetization, which is used to encode either “0” or “1”. The direction of magnetization may be changed locally during a write operation, which is performed by applying a strong external magnetic field exactly to the target region. Each region may also be read by passing a coil over it: the direction of the current generated by electromagnetic induction depends on the direction of the region’s magnetization. Read and write operations are perfected to such an extent that each of them takes only a couple of nanoseconds per magnetic region. This translates to speeds of more than 100 MB/s.

To increase HDD capacity, each platter has two magnetic surfaces, and a group of identical platters is mounted together to form a platter assembly. The maximum number of platters in an assembly depends on disk form factor and height: 3.5" disks may have 1-7 platters, while 2.5" disks have only 1-3. Thus, a 3.5" disk may have a maximum of 14 surfaces. The number of platters is rarely specified in datasheets, so you may want to consult The HDD Platter Capacity Database. This resource was created by an enthusiast with the sole purpose of providing platter configuration info without the need to disassemble an HDD. You may note that some drives have weird setups. For example, it is common for one of the platters in a two-platter HDD to have only a single functional surface (a total of 3 surfaces). Another example is an HDD where the area of some surfaces is used only partially. Usually such setups are created in order to fulfil marketing demand for a full range of capacities: instead of manufacturing completely different drives for each capacity value, it may be cheaper to produce an HDD with a single large fixed capacity and then create submodels of it with reduced capacities by locking some surfaces in firmware.

All platters are mounted on a single spindle and rotate synchronously at a fixed speed. Once the HDD is powered on, an electric motor spins up the platter assembly to nominal speed, and this rotation continues until the HDD is powered down or goes into power-saving mode. This speed, called “rotational speed”, is measured in revolutions per minute (RPM) and has a significant effect on both sequential and random access performance. A typical PC or server 3.5" disk drive has a rotational speed of 7200 RPM, which is a de facto standard. Laptop 2.5" HDDs are usually slower, only 5400 RPM, but some models are as fast as their larger counterparts at 7200 RPM. Very fast drives for the most demanding applications also exist, which rotate at 10,000 RPM and even 15,000 RPM. Such drives are often called “raptors”, after a popular series of such disks, the Western Digital Raptor. The problem with fast drives is their high cost and an order of magnitude less capacity. Currently, they are becoming nearly extinct because of the introduction of SSDs, which are cheaper and even faster.

Reads and writes are performed by magnetic heads, one head per platter surface. All heads are mounted at the very end of the actuator arm. The actuator arm may be rotated on demand by an arbitrary angle. This operation positions all heads simultaneously onto concentric tracks. Positioning is relatively fast: it takes only a couple of milliseconds to complete, which is achieved with accelerations of hundreds of g. It is also very precise: modern disk drives have more than 100,000 concentric tracks per inch. Actuator arm repositioning can be easily heard: it creates an audible clicking sound during operation.

Each track is logically divided into a number of sectors, where each sector stores 512 or 4096 bytes of useful information depending on model. Sector is the basic unit of both read and write operations. Such configuration makes it possible to access whole platter surface: actuator arm selects concentric tracks and constant rotation of platter assembly guarantees that each of sectors periodically passes under head.

For addressing purposes, virtual combination of vertically stacked tracks (one track per surface) is called cylinder. For example, if HDD has 5 platters each with 2 surfaces, then each cylinder consists of 10 vertically stacked tracks, one per surface. When you reposition actuator arm, you reposition it to some cylinder. This places each of heads above corresponding track of this cylinder.

Besides platter assembly, HDD is equipped with electronic controller. It acts like a glue between host and magnetic storage and is responsible for all sorts of logical operations:

- it governs actuator arm repositioning
- computes and verifies error-correcting code (ECC)
- caches and buffers data
- maps addresses of “bad” sectors into spare ones
- reorders requests to achieve better performance (NCQ)
- implements communication protocol: SATA or SAS

Sector size

Each track is divided into sectors. All sectors carry user data of fixed length, 512 or 4096 bytes. Important to note that sector is a minimal unit of data which may be read or written to. It is not possible to read or write only part of sector, but it is possible to request disk drive to read/write sequence of adjacent sectors. For example, prototype for blocking read function inside driver could be like this:

int hw_read(size_t IndexOfFirstSectorToRead, size_t NumberOfSectorsToRead, void* Buffer);

User data is not the only part of sector. As displayed in image below, each sector carries additional bytes which are used internally by HDD. Intersector gap and sync marker areas help HDD to robustly synchronize to the beginning of next sector. ECC area stores error-correcting code for user data. ECC becomes more and more important with growing HDD capacities. Even with ECC, modern HDDs are not guaranteed to be error-free. Typical HDD allows 1 error bit per $10^{15}$ bits on average (1 error per 125 TB). This figure is of little interest for surveillance video storage but may become a cover-your-ass problem for storing numerical financial data.
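To put the error-rate figure in perspective, the arithmetic can be sketched as below. The rate of 1 error bit per 10^15 bits is the figure quoted above; the 10 TB drive size is a hypothetical value chosen only for illustration.

```python
# Unrecoverable read error arithmetic, assuming the quoted rate of
# 1 error bit per 10**15 bits read.
BITS_PER_ERROR = 10**15
TB = 10**12  # decimal terabyte, as used in datasheets


def bytes_per_error(bits_per_error=BITS_PER_ERROR):
    """Average number of bytes read per one erroneous bit."""
    return bits_per_error // 8


def expected_errors(bytes_read, bits_per_error=BITS_PER_ERROR):
    """Expected number of error bits when reading `bytes_read` bytes."""
    return bytes_read * 8 / bits_per_error


print(bytes_per_error() / TB)    # 125.0 TB read per error on average
print(expected_errors(10 * TB))  # 0.08 for one full read of a hypothetical 10 TB drive
```

In other words, under this assumed rate roughly one in twelve full reads of such a drive would hit an error bit on average.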

One important characteristic of HDD that influences performance is sector size. Sector size is always specified as number of user data bytes available in each sector. Standard value is 512 bytes. Such HDDs have been used for decades and are still mass produced. 512 byte block is also a default unit for most of unix commands dealing with files and filesystems, such as dd and du. After introduction of HDDs with other sector sizes, it became necessary to distinguish HDDs by sector size. Common way is to use term 512n which stands for “512 native”. Also, “physical” is used interchangeably with “native”. Thus, terms “512n”, “512 physical”, “512 native” all identify HDD with 512 byte sectors.

Intense rivalry for higher HDD capacity forced manufacturers to increase standard sector size from 512 bytes to larger value. The reason behind this decision is that system data occupies nearly constant size: intersector gap, sync marker and sector address are required only once per sector and do not depend on the amount of data stored in user data section. Overhead of system data becomes lower with increase in sector size, which adds a bit more capacity to HDD. So, new standard value for sector size emerged: 4096 bytes. Such sector size is called “Advanced Format” (AF) and is a relatively new advancement, since 2010. AF is implemented by most of HDDs with multi-terabyte capacities and is advertised as 4Kn (or 4096n).

Third option exists to provide backward compatibility for operating systems which do not support AF: 4096-byte physical sectors with 512-byte logical sectors. When using such drives, OS talks to HDD in terms of 512-byte logical sectors. But internally every 8 such logical sectors are grouped together and form single physical 4096-byte sector. Such drives are advertised as 512e (“512 emulation”). Modern operating systems are aware of the fact that logical and physical sector sizes may be different and always issue requests in units of physical sector size (4 KiB for 512e). This doesn’t create any performance penalties. But if some older OS, thinking that drive really has 512 byte sectors, makes write request in violation of 4 KiB alignment rule, then drive accepts this request but performs read-modify-write (transparently) because only part of physical 4 KiB sector is modified. Needless to say, this severely cripples performance.
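The alignment rule for 512e drives can be sketched as a small check. The function name and defaults below are hypothetical and serve only as an illustration: a write avoids read-modify-write only if it starts on a physical sector boundary and covers a whole number of physical sectors.

```python
LOGICAL = 512    # bytes per logical sector, as seen by the OS
PHYSICAL = 4096  # bytes per physical sector inside a 512e drive


def needs_read_modify_write(first_lba, sector_count,
                            logical=LOGICAL, physical=PHYSICAL):
    """Return True if a write covers some physical sector only partially."""
    ratio = physical // logical  # 8 logical sectors per physical one
    starts_aligned = first_lba % ratio == 0
    length_aligned = sector_count % ratio == 0
    return not (starts_aligned and length_aligned)


print(needs_read_modify_write(0, 8))   # False: exactly one physical sector
print(needs_read_modify_write(63, 8))  # True: straddles two physical sectors
print(needs_read_modify_write(8, 4))   # True: only half of a physical sector
```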

In Linux, it is possible to find out information on sector sizes by looking into sysfs. Replace sda with the name of device you want to know about. This should be raw block device name and not a partition.

$ cat /sys/class/block/sda/queue/logical_block_size
512
$ cat /sys/class/block/sda/queue/physical_block_size
4096
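The same sysfs files can be read programmatically. The sketch below is a minimal illustration: the helper names `sector_sizes` and `drive_kind` are hypothetical, and the classification simply follows the 512n / 512e / 4Kn naming used in this section.

```python
from pathlib import Path


def sector_sizes(device, sysfs_root="/sys/class/block"):
    """Return (logical, physical) sector sizes in bytes for a raw block device."""
    queue = Path(sysfs_root) / device / "queue"
    logical = int((queue / "logical_block_size").read_text())
    physical = int((queue / "physical_block_size").read_text())
    return logical, physical


def drive_kind(logical, physical):
    """Map a (logical, physical) pair to the marketing name of the format."""
    names = {
        (512, 512): "512n",
        (512, 4096): "512e",
        (4096, 4096): "4Kn",
    }
    return names.get((logical, physical), "other")


# Example usage on a real system (device name is an assumption):
#   logical, physical = sector_sizes("sda")
#   print(drive_kind(logical, physical))
```

The `sysfs_root` parameter exists only to make the function easy to try against a scratch directory; on a real system the default path is the one shown in the shell commands above.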

It is worth noting that some enterprise class drives have a bit larger sector size than canonical values: 512+8, 512+16, 4096+16, 4096+64 or 4096+128 bytes. Such extensions are part of SCSI protocol and are called DIF/DIX (Data Integrity Field/Data Integrity Extensions) or PI (Protection Information). Extra bytes are used to store checksums computed by software or by RAID controller rather than by disk drive itself. Such approach makes verification of data integrity more robust. It verifies storage subsystem as a whole, including not only HDDs but also all intermediate electronics and buses.

Addressing

Next thing we need to know is sector addressing. Addressing scheme is very important for performance as it allows OS to optimize its requests, and that’s why addressing scheme is kept similar among all HDDs.

Very old HDDs were addressed by using vector of three numbers (cylinder, head, sector), called CHS for short. Single HDD had fixed number of cylinders, fixed number of heads, and fixed number of sectors per track. All these values were reported to BIOS during system initialization. If you wanted to access some sector, then you would send its three coordinates to disk drive. Cylinder value specified position where to move actuator arm to, head value activated corresponding head, and, after that, HDD waited until sector with given sector number passed under activated head while platter assembly was being constantly rotated.

Access to adjacent sectors belonging to single track is cheap, while access to sectors belonging to tracks of different cylinders is expensive. Filesystems make use of this fact to optimize allocation algorithms. In particular, they try to make files occupy nearby sectors rather than far away sectors.

CHS scheme became obsolete, partly because using fixed number of sectors per track is not optimal in terms of HDD capacity. Outer sectors are longer compared to inner sectors (left image), but store the same amount of information. This inspired manufacturers to create technology which is called ZBR — zone bit recording. Idea of ZBR is to group cylinders by sector density. Number of sectors per track is fixed inside single group, but grows gradually when moving from inner group to outer group.

Downside of such approach is that addressing scheme becomes very complex. OS needs to know number of cylinder groups and number of sectors per track for each such group. Instead of exposing these internals to OS, manufacturers adopted simpler addressing scheme called LBA (logical block addressing). When OS works with LBA enabled disk drives, it needs to specify only flat sector address in range $[0, N-1]$, where $N$ is total number of sectors. HDD controller translates this flat address into geometrical coordinates (cylinder, cylinder group, track, sector), and this translation algorithm is transparent to OS.

Though exact algorithm to map LBA to geometrical coordinates is not known to OS, mapping is somewhat predictable. This is done intentionally to allow filesystems to continue to employ their optimizations. In particular:

- Access to nearby LBA addresses is cheaper than access to far away addresses. For example, it is better to access several sectors with adjacent addresses rather than the same number of sectors scattered across the whole address range.
- LBA addresses always start with outermost cylinder and end with innermost one. Zero LBA address is mapped to some sector in the outermost cylinder, while the last LBA address is mapped to some sector in the innermost cylinder.

| Linear → | 000 | 001 | 002 | … | 049 | 050 | 051 | 052 | … | 099 | 100 | 101 | 102 | … | 149 | … | 950 | 951 | 952 | … | 999 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Cylinder | 0 | 0 | 0 | … | 0 | 0 | 0 | 0 | … | 0 | 1 | 1 | 1 | … | 1 | … | 9 | 9 | 9 | … | 9 |
| Head | 0 | 0 | 0 | … | 0 | 1 | 1 | 1 | … | 1 | 0 | 0 | 0 | … | 0 | … | 1 | 1 | 1 | … | 1 |
| Sector | 0 | 1 | 2 | … | 49 | 0 | 1 | 2 | … | 49 | 0 | 1 | 2 | … | 49 | … | 0 | 1 | 2 | … | 49 |
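The mapping tabulated above can be expressed in a few lines of code. This is only a sketch for the toy layout from the table (10 cylinders, 2 heads, 50 sectors per track, all counted from zero); real drives with ZBR have a varying number of sectors per track and keep their actual mapping private.

```python
CYLINDERS = 10          # toy geometry matching the table above
HEADS = 2
SECTORS_PER_TRACK = 50


def lba_to_chs(lba):
    """Translate a flat address into (cylinder, head, sector), zero-based."""
    cylinder, rest = divmod(lba, HEADS * SECTORS_PER_TRACK)
    head, sector = divmod(rest, SECTORS_PER_TRACK)
    return cylinder, head, sector


def chs_to_lba(cylinder, head, sector):
    """Inverse translation for the same toy geometry."""
    return (cylinder * HEADS + head) * SECTORS_PER_TRACK + sector


for lba in (0, 49, 50, 99, 100, 999):
    print(lba, lba_to_chs(lba))
```

Note how consecutive addresses first exhaust one track, then switch heads within the same cylinder, and only then move the arm to the next cylinder.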

Transition to ZBR had serious impact on HDD performance. Because disk drive spins at fixed rate, head reads more sectors per second when positioned at outer cylinder than when positioned at inner cylinder. Combined with the second rule above (LBA addresses start at the outermost cylinder), this leads to important characteristic of HDDs: working with low LBA addresses is faster than working with high addresses.

All modern HDDs use LBA atop of ZBR. It is possible occasionally to see CHS values, for example in BIOS or in hdparm output. But keep in mind that these values are not true values: this is emulation provided only for backward compatibility. It has nothing to do with actual internal geometry and provides access to only limited subset of HDD sectors.

Performance

Having covered the basics of HDD operation, we can move on to measuring performance. Each IO operation is a read or a write of a sequence of sectors specified by LBA address of first sector. Mechanical nature of HDD makes IO operation a multistep process:

- Random access. Obviously, in order to start accessing sectors, head must be positioned to the first requested sector. When request is received, actuator arm position relative to center of platter assembly is in some random state in general case. The same is true about instantaneous angular position of platter assembly. So, HDD performs random access, which in turn consists of two steps:
  - Seek. Controller sends command to move actuator arm to a position so that head is above track where first requested sector is located. Actuator arm is moved inwards or outwards depending on its current position.
  - Rotational delay. Because platters are being constantly rotated, target sector will eventually pass under head. No special action is required, only idle waiting. This time may be as long as it takes to make one full revolution, but is only half revolution on average.
- Now HDD reads or writes all requested sectors one by one, while platter assembly continues to be rotated. Situation is possible when some of requested sectors are located in adjacent cylinder. HDD has to reposition actuator arm once again (track-to-track seek) in this case.

Each of these steps takes considerable amount of total time and cannot be ignored in general. Let’s look at how these steps affect performance of different access patterns.

Sequential access

Sequential access pattern is natural to HDD. It arises when we want to read or write very large number of adjacent sectors starting with given address: $a, a+1, \dots, a+n-1$. Here $n$ is on the order of hundreds or thousands. Because number of sectors to access is huge, positioning to the first sector ($a$) takes small time compared to read/write itself. So, we assume that head is already positioned to it. Taking into consideration LBA to CHS mapping, now the only thing we need to do is to wait some time while platter assembly is being rotated: all required sectors will be read/written during this rotation. Faster rotation speed and higher density of sectors per track make sequential access faster.

The only bad thing that may happen is when subsequent sector has different cylinder coordinate (e.g. CHS transition (0,1,49)→(1,0,0)). Actuator arm has to be repositioned in this case. Repositioning is performed to adjacent cylinder (to the inside of the platter), and time required to perform such move is called track-to-track seek time. Typical value of track-to-track seek time is only couple of milliseconds and is often specified in HDD’s datasheet. Anyway, track-to-track seeks do not contribute very much to sequential access performance and may be ignored.

Good thing about sequential access is that almost all time goes directly into reading or writing sectors. Thus, throughput efficiency is at its maximum. This is the optimum mode of operation for HDD by design. Manufacturers usually provide sequential access speed as sustained data transfer rate in datasheets. Typical value for modern 3.5'' 7200 RPM disk drive is 150..200 MB/sec. But you must be aware of two common marketing tricks leading to this figure. First one is well-known: rate is specified in decimal megabytes per second rather than in binary ones. Difference between them is about 5%: not so great, but also not too small to ignore if you’re building high load file storage. Second trick is subtle one. This transfer rate is the maximal rate and was measured by manufacturer at lowest address: remember that largest number of sectors is in the outermost cylinder because of ZBR. Average rate is much smaller: you will need to subtract 20%-25% of advertised rate to get it. Minimal transfer rate, which is achieved in innermost cylinder, is even worse than that: down 40%.
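Both corrections can be applied to a datasheet figure with simple arithmetic. The sketch below assumes a hypothetical advertised rate of 200 MB/s and uses the rule-of-thumb percentages from the paragraph above (20%-25% lower on average, 40% lower at the innermost cylinder); actual drives will differ.

```python
MB = 10**6    # decimal megabyte, used in datasheets
MIB = 2**20   # binary megabyte


def to_mib_per_s(rate_mb_per_s):
    """Convert a rate in decimal MB/s into binary MiB/s."""
    return rate_mb_per_s * MB / MIB


def derated(rate_mb_per_s, drop):
    """Apply a fractional drop (e.g. 0.25 for 25%) to an advertised rate."""
    return rate_mb_per_s * (1 - drop)


advertised = 200  # MB/s, hypothetical datasheet value
print(f"max:     {to_mib_per_s(advertised):.1f} MiB/s")
print(f"average: {derated(advertised, 0.25):.0f}..{derated(advertised, 0.20):.0f} MB/s")
print(f"minimum: {derated(advertised, 0.40):.0f} MB/s")
```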

In general, sequential speed depends on following disk characteristics:

- Rotational speed. Disk drive with 7200 RPM is faster than 5400 RPM one.
- Sector count per single track. This in turn depends on:
  - HDD model introduction date. Newer generations of HDD have higher density of sectors, both longitudinal (more sectors per track) and radial (more tracks per platter surface). Recently introduced HDD model will be faster than model introduced five years ago.
  - LBA address. Working with low addresses is faster than working with high addresses.
- Track-to-track seek time. HDD with smaller value is a bit faster.

Random access

While hard disk drives are good at sequential access, they really suck at random access at the same time. Random access arises in situations when we need to make a lot of small (couple of sectors) reads or writes scattered all over the address space.

Time it takes to read/write sectors is negligible because number of sectors involved is small by the definition of random access. The true problem is that to read/write this small number of sectors, head must be properly positioned to them. This repositioning (called access time) takes all the time, and that’s why throughput (MB/s) drops greatly compared to sequential access.

Let’s look at what happens during random access. HDD carries out two operations in sequence:

- First, it moves actuator arm to target track. Time required to perform such move is called seek time.
- Next, it waits until platter rotates so that target sector is just beneath the head. Corresponding metric is called rotational latency.

Seek time depends on number of tracks between current track and destination track. The closer they are together, the faster is the seek. For example, worst case is moving from the innermost track to the outermost track or vice versa. Conversely, seek to adjacent track is relatively cheap. In practice, seek time is not necessarily linearly dependent on track count between source and destination because HDDs move actuator arm with some acceleration. In order to compare disk drives, three different characteristics are in use:

- Track-to-track seek time (~0.2..2 ms). This is time it takes to move from one track to its neighbour and presents the lowest bound for all possible seek times. This characteristic is not important for random access but plays some role in sequential access as was explained in previous section.
- Full stroke seek time (~15..25 ms). Time it takes to move from outermost track to innermost one or vice versa. This is the worst possible seek time.
- Average seek time (~4..6 ms). This time is computed as average time it takes to move actuator arm across 1/3 of all tracks. This number (1/3) is derived from the fact that if we have two uniformly distributed independent random variables (source and destination tracks respectively), then average difference between them is 1/3 of their range.
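The 1/3 figure can be checked by brute force. The sketch below assumes a hypothetical drive with a small number of tracks and every (source, destination) pair equally likely; it computes the exact mean distance and compares it with the closed form $(n^2 - 1) / (3n)$, which approaches $n/3$ for large $n$.

```python
from fractions import Fraction


def mean_seek_distance(track_count):
    """Exact mean |src - dst| over all equally likely (src, dst) track pairs."""
    total = sum(abs(src - dst)
                for src in range(track_count)
                for dst in range(track_count))
    return Fraction(total, track_count ** 2)


n = 300  # hypothetical track count, kept small so the double loop is fast
mean = mean_seek_distance(n)
print(float(mean / n))  # close to 1/3 of the full range
```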

Rotational latency is less predictable but is strictly bounded. After seek is done, platter angular position is random relative to target sector, and HDD needs to wait while platter is being rotated. Best case is if target sector turned out to be exactly under head after seek. Worst case is if target sector has just passed the head, and wait for full revolution is required until it passes under the head again. It can be said that wait for half rotation is done on average. Thus, rotational latency depends solely on disk rotational speed (RPM). Table below lists well known rotational speeds along with translations of RPM values into how long it takes to make single revolution and how many such revolutions are performed per second.

| RPM | Half revolution, ms | Half revolution, IOPS | Full revolution, ms | Full revolution, IOPS |
|---|---|---|---|---|
| 15,000 | 2.0 | 500 | 4.0 | 250 |
| 10,000 | 3.0 | 333 | 6.0 | 166 |
| 7,200 | 4.2 | 240 | 8.3 | 120 |
| 5,900 | 5.1 | 196 | 10.2 | 98 |
| 5,400 | 5.6 | 180 | 11.1 | 90 |
| 4,200 | 7.1 | 140 | 14.3 | 70 |
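The figures in the table above follow directly from the RPM value. A minimal sketch of the conversion is given below; the function name is hypothetical, and IOPS values are truncated to whole numbers to match the table.

```python
def rotational_row(rpm):
    """Return (rpm, half-rev ms, half-rev IOPS, full-rev ms, full-rev IOPS)."""
    full_ms = 60_000 / rpm   # one revolution in milliseconds
    half_ms = full_ms / 2
    full_iops = rpm // 60    # revolutions per second
    half_iops = rpm // 30    # half revolutions per second
    return rpm, round(half_ms, 1), half_iops, round(full_ms, 1), full_iops


for rpm in (15000, 10000, 7200, 5900, 5400, 4200):
    print(rotational_row(rpm))
```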

For example, half revolution takes 4.2 ms for 7200 RPM disk drive. If you are querying database for random records, you can’t get performance of more than 240 requests per second on average. This value is limited by rotational speed even if records are packed close together (seek is cheap in this case). If you’ve got better performance then this means that records come out mostly from page cache rather than from HDD, and you will get into trouble when dataset becomes large enough so that it doesn’t fit in page cache anymore.

Seek time and rotational latency are rivals in the field of who contributes more to the access time. Graph below demonstrates dependence between LBA address delta and range of possible access times. HDD is assumed to be 7200 RPM and to execute full stroke seek in 15 ms. Light green line represents lowest possible access time, which is the result of seek time only. Mid-green and dark green lines are cases when additional wait was required for half and full revolutions respectively. For example, if we send requests to 1 TB drive, previous request was at 100 GB position and new request is at 500 GB, then delta between them is 40%. Thus, according to the graph below, access time is bounded between 10..18 ms. Repetition of this test will produce different values from 10 to 18 ms, but average access time will be 14 ms.
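The bounds in the worked example can be reproduced with a few lines. The sketch assumes the seek time for a 40% delta is 10 ms, which is the value read off the graph in the example above, not something computed from drive parameters.

```python
def access_time_bounds_ms(seek_ms, rpm):
    """Return (best, average, worst) access time for a given seek time."""
    full_rev_ms = 60_000 / rpm
    best = seek_ms                       # target sector is already under head
    average = seek_ms + full_rev_ms / 2  # half a revolution on average
    worst = seek_ms + full_rev_ms        # target sector has just been missed
    return best, average, worst


best, average, worst = access_time_bounds_ms(seek_ms=10, rpm=7200)
print(f"{best:.1f} {average:.1f} {worst:.1f}")  # 10.0 14.2 18.3
```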

It can be observed that if address delta is relatively small, then rotational latency is definitely the major contributor: sometimes it is zero, but sometimes it is full rotation, while seek time is always negligible. Conversely, if address delta is large, then seek time takes all the credit. Finally, if address delta is neither too big nor too small, then there is no definite winner.

To realize slowness of random access, let’s compare it to CPU speed. If we are given a 3 GHz CPU, then we may assume that it performs $3 \times 10^9$ basic operations (like additions) per second in sequence, and this is the worst case, without taking into account out-of-order execution and other hardware optimizations. While disk drive serves single random access read of 5 ms, CPU manages to complete $3 \times 10^9 \times 0.005 = 1.5 \times 10^7$ operations. HDD random access is more than seven orders of magnitude slower than CPU arithmetic unit.

Hybrid access

Some applications have a concept of data chunk. They read/write data in chunks of fixed size, which can be configured. Choice of chunk size depends on what is more important: throughput or response time. You can’t get both of them. If throughput is more important than response time, then chunk size is chosen to be very large, so that access pattern becomes sequential. This is natural and most effective mode for HDDs. If response time is more important than throughput, then chunk size is chosen to be very small — not more than couple of dozens of sectors. Access pattern becomes almost random in this case. As explained above, HDDs are very poor at performing random access: its speed (MB/s) may fall down orders of magnitude compared to throughput-oriented chunk size.

Now imagine web application that streams video files to multiple clients simultaneously. Of course, we would like to make chunk size as large as possible (tens and hundreds of megabytes). Unfortunately, such large chunk size has its own drawbacks. First of all, we need in-memory buffer of corresponding size for each separate client, which may be expensive. Second, and more important, is that clients may be blocked for long periods of time: if, while reading data for client A, new client B makes request, then B is stalled until read for A is complete. Conversely, using very small chunk size leads to perfect fairness and small memory footprint but uses HDDs extremely inefficiently (pure random access).

So, what we need to do is to find a balance between response time and effective throughput. For example, we may want to choose such chunk size that values of both summands in general formula are equal:

$$t_{\text{total}} = t_{\text{access}} + \frac{\text{chunk size}}{\text{sustained speed}}$$

If HDD has average sustained speed of 100 MB/s and average access time is 5 ms, then chunk size is chosen to be $100\ \text{MB/s} \times 5\ \text{ms} = 500\ \text{KB}$. Single operation will take 10 ms on average with such chunk size, half of which is spent on random access and another half is spent on read/write itself. Throughput is also not so bad: $500\ \text{KB} / 10\ \text{ms} = 50\ \text{MB/s}$.
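The balanced chunk size and the resulting throughput can be computed for any drive. This is a sketch under the simple model used in this section (total time is access time plus transfer time); the function names are hypothetical.

```python
def balanced_chunk_bytes(speed_bytes_per_s, access_time_s):
    """Chunk size at which transfer time equals access time."""
    return speed_bytes_per_s * access_time_s


def effective_throughput(chunk_bytes, speed_bytes_per_s, access_time_s):
    """Bytes per second actually delivered, including access overhead."""
    total_time_s = access_time_s + chunk_bytes / speed_bytes_per_s
    return chunk_bytes / total_time_s


SPEED = 100 * 10**6  # 100 MB/s average sustained speed
ACCESS = 0.005       # 5 ms average access time

chunk = balanced_chunk_bytes(SPEED, ACCESS)
print(f"chunk size: {chunk / 10**3:.0f} KB")
print(f"throughput: {effective_throughput(chunk, SPEED, ACCESS) / 10**6:.0f} MB/s")

# Larger chunks approach the sustained speed, smaller ones fall far below it.
for size in (16 * 1024, chunk, 8 * 2**20):
    print(size, round(effective_throughput(size, SPEED, ACCESS) / 10**6, 1))
```

Moving away from the balanced point trades one metric for the other: larger chunks raise MB/s at the cost of longer per-request time, smaller chunks do the opposite.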

Comparison

In the image below, throughput efficiency of three different access patterns is compared. Time flows left to right; each separate long bar represents single request. Blue color represents “useful” periods of time, when data is actually read or written. Red color means periods of time wasted on seeks and rotational delays.

Sequential access demonstrates nearly perfect throughput, above 90%. Almost all time is spent in reading or writing sectors excluding initial random access and rare track-to-track seeks. Random access is the opposite: all time is burnt in disk random accesses, and only very small fraction of time is spent in working directly with data. Throughput efficiency is just above zero. Hybrid access displays 50% throughput efficiency by design: a balance between throughput and IOPS.

As a rule of thumb, use HDD for mostly sequential access and do not use it for random access. The only exception is when response time of tens of milliseconds is acceptable and you are sure that system would never be required to provide more than one hundred IOPS.

Experiments

Effect of LBA address on sequential access performance

This test demonstrates effect of LBA address on sequential performance. Test was performed by reading 128 MiB chunks of data at different addresses: first probe was made at address 0, next — at 1% of disk capacity, and so on with last probe made at 100% of capacity. Horizontal axis is the address value in gigabytes, vertical axis is the achieved speed.

You can clearly see that speed at highest address (which is mapped to innermost HDD track) is less than half of the speed at lowest address. Sometimes it may even be worth repartitioning HDD with regard to this effect:

- If you are going to work with data which will be often accessed sequentially, then create dedicated partition for it in the very beginning of HDD. Examples of such data include: web server access logs, database files and streaming video.
- If you have data which will be accessed rarely (access pattern doesn’t matter), then create dedicated partition for it in the very end of HDD so that this data won’t use valuable low addresses.

Random access response time distribution

Next graph is dedicated to random access timings. Each point corresponds to one of 2000 random reads performed, each read is one sector long (512 bytes) and is properly aligned. Horizontal axis is LBA address delta between source and destination positions, vertical axis is operation response time.

Points lying at the lower bound of the cloud are the best cases. They correspond to situations when after actuator arm has been positioned at proper track, required sector turned out to be just beneath the head. That is, no additional wait for platter rotation is required. Note that seek time becomes longer with larger LBA address delta. Seek time corresponding to max delta is full stroke seek time (21 ms).

Conversely, upper bound points correspond to the worst possible situation. After arm has been positioned to target track, required sector has just moved past head, and drive has to wait for one full platter rotation until required sector passes under head once again. According to above graph, rotational delay is spread in range [0, 11] milliseconds and is independent of deltas. Knowledge of full rotation time (11 ms) immediately leads us to the conclusion that this is 5400 RPM HDD that was tested.

It is important to remember that short delta random accesses are limited in performance by rotational speed while large delta random accesses are limited by seek time.

Chunk size

This test demonstrates influence of chunk size on two types of throughput: MB/s and IOPS.

If HDD was an ideal device, then access time would be zero and throughput (MB/s) would be constant (dashed line) regardless of chunk size. Unfortunately, access time is well above zero due to mechanical nature of HDD, which results in throughput being much worse on average. Effective throughput converges to optimum only when chunk size is very large, starting with 8 MiB for specimen used in this test. HDD is working in purely sequential access mode and achieves its top performance (MB/s) in this region. Almost all time spent inside HDD goes directly into reading or writing sectors.

Left part of graph displays the opposite. Chunk size is very small, and HDD is working in purely random access mode. As such, HDD manages to complete a lot of independent operations per second, but cumulative write speed (MB/s) falls dramatically compared to purely sequential access. For example, we get nearly top IOPS with 16 KiB chunks but write speed is only ~2 MB/s, which is 1/15 of top possible speed. Time spent on writing sectors is negligible in this case: most of time is actually “burnt” on seeks and rotational delays. It is easy to encounter such situation in real world when copying a directory with a lot of small files in it. When this is happening, you may observe your HDD working like mad judging by blinking LEDs, you may hear hellish sounds from inside (seeks), but copy speed is just above zero MB/s.

Point of intersection corresponds to kind of golden mean, where both MB/s and IOPS are neither too bad nor too good.

Solid-state drive (SSD)

SSDs are slowly but steadily taking the place of HDDs as a mainstream storage device. Free of mechanical parts, SSD closes the gap between sequential and random types of access: both are served equally fast. Unfortunately, cost per gigabyte is still about eight times higher for SSD compared to HDD even after more than decade of intensive development. This makes SSDs and HDDs coexist organically in data storage device market, with each one being ideally suitable for specific set of applications.

Theory of operation

Floating gate transistor

Solid state drives, USB sticks and all common types of memory cards are all based on flash memory. Basic unit of flash memory is a floating gate IGFET transistor. Idea behind it is to add additional layer, which is called “floating gate” (FG), to otherwise ordinary IGFET transistor. Floating gate is surrounded by insulating layers from both sides. Such structure allows excess electrons to be trapped inside FG. Under normal circumstances, neither can they escape FG nor can other electrons penetrate insulator layer and get into FG. Hence the ability of transistor to store information: it is said that transistor stores binary “0” or “1” depending on whether its FG is charged with additional electrons or not.

Reading transistor state is performed by checking whether source-to-drain path conducts electricity or not. If gate is unconnected to power source, then this path is definitely unconductive, regardless of whether FG is charged or not: one of p-n junctions is reverse-biased and won’t allow current to flow through it.

Things become interesting when gate is connected to power source. If FG is uncharged, then control gate’s electrical field attracts some of electrons to the very top of p-substrate like a magnet. These electrons can’t move up because they do not have enough energy to pass insulator layer, so they form narrow region which is conductive and exists until power is removed from gate. Current is free to flow along this region in both directions by means of these electrons, which makes source-to-drain path conductive. But if FG is charged, then FG charge partially shields p-substrate from gate’s electrical field. Remaining electrical field is not enough to attract substantial number of electrons, and source-to-drain path remains unconductive.

It is worth mentioning that if gate voltage is raised to an even higher level, then FG won’t be able to provide enough shielding anymore, and source-to-drain path will become conductive unconditionally. Such operation is of no use for standalone transistor, but is essential when transistors are arranged in series, as will be explained later.

To change the charge of FG, one needs to apply high voltage (tens of volts) between gate and other terminals. It forces electrons to pass lower insulator layer. Depending on direction of voltage applied, either electrons will move out of FG to the p-substrate, or vice versa, move from p-substrate into the FG. Top insulator layer is made of material which is impenetrable even at high voltages. Adding electrons to FG is called “programming” in terms of flash memory and removing electrons from FG is called “erasing”. Unfortunately, each program or erase operation worsens quality of insulator layer. Some of electrons get trapped in insulator layer rather than in FG, and there are no simple controlled means of removing them from there. Electrons trapped in insulator layer create residual charge and also facilitate leakage of electrons to/from FG. After number of program/erase cycles, insulator layer wears out and transistor is not suitable for data storage anymore. Manufacturers specify endurance of flash memory as guaranteed number of program/erase cycles before failure (P/E cycles).

Modern flash memory is able to store more than single bit per transistor (transistors are called cells in terms of flash memory). This is achieved by distinguishing between more than two levels of charge, for example, by testing source-to-drain conductivity with different gate voltages. Currently, following types are produced:

- SLC, “single-level cell”: senses 2 levels of charge, stores 1 bit
- MLC, “multi-level cell”: senses 4 levels of charge, stores 2 bits. Technically, “multi-” may mean any number of levels larger than two, but in practice it almost always refers to four levels.
- TLC, “triple-level cell”: senses 8 levels of charge, stores 3 bits

Storing more bits per cell has the obvious benefit of higher device capacity. The tradeoff is that things become more complicated as more bits per cell are stored. It is easy to implement read/program operations with only two levels of charge: every charge above a predefined threshold is treated as binary 1 and every charge below it as binary 0. Working with more than two levels requires intermediate charges to fit exactly into specified windows.

This makes all operations slower. For example, programming now has to be done in small steps in order not to overshoot the desired window:

- Add a small charge to FG

- Verify its actual level

- Go to the first step if it has not reached the required level yet
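The program-and-verify loop above can be sketched as follows. This is a toy model, not a description of any real chip: charge is measured in arbitrary integer units, and the function name and parameters are assumptions made for illustration. Such schemes are commonly known as incremental step pulse programming.

```python
def program_cell(target_low, target_high, step=1, max_pulses=1000):
    """Raise a cell's charge in small steps until it lands inside the target window.

    Charge is in arbitrary illustrative units. Returns (final_charge, pulses_used).
    """
    if step > target_high - target_low:
        # Charge cannot be removed without an erase, so overshooting is fatal.
        raise ValueError("step is wider than the window; the charge could jump over it")
    charge = 0                      # erased cell: no charge in the floating gate
    pulses = 0
    while charge < target_low:      # verify: has the required level been reached?
        if pulses == max_pulses:
            raise RuntimeError("cell failed to program")
        charge += step              # add a small charge
        pulses += 1
    return charge, pulses


print(program_cell(40, 44, step=2))   # (40, 20)
print(program_cell(40, 44, step=3))   # (42, 14)
```

A smaller step lands in the window more precisely but needs more pulses, which is one reason why programming MLC/TLC is slower than programming SLC.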

Storing more levels per cell also severely reduces the number of P/E cycles: even slow leakage of electrons through the insulator layer can move the charge out of its window, and the cell is then sensed as holding an incorrect value. Typical SLC endures up to 100,000 P/E cycles, MLC about 3,000, and TLC is even worse: only about 1,000. To alleviate this problem, flash memory contains extra cells to store ECC, but this helps only to some extent.

Block

Cells are organized into two-dimensional arrays called blocks. All cells share the p-substrate, which is why only three leads per cell are shown. Each row of cells forms a single page, which has a role somewhat similar to that of a sector in HDD: in most cases it is the basic unit of reading and programming. A typical page consists of 4K-32K cells plus some cells to store per-page ECC (not shown). A typical block has 64-128 such pages.

A block supports the same three operations as a standalone cell: read, program and erase. But because cells are now connected in series, performing them becomes even trickier.

- Read

Both read and program operations act on single pages. A particular page is selected by connecting the word lines of all other pages to a pass voltage that is high enough to make their cells conductive unconditionally. This opens all cells in them, no matter whether their floating gates are charged or not. As a result, the circuit behaves as if only a single page were present.

The next step depends on the operation. For a read, the selected page’s word line is connected to the read voltage and the source line is grounded. Data is then sensed via bit lines: the path between the source line and each bit line is conductive if and only if the corresponding cell in the selected page doesn’t hold charge. If the cell is MLC/TLC, then either different levels of current are sensed, or reading is performed in a number of steps with different read voltage values. It takes about 25 μs to read a single page in SLC, and a couple of times longer in MLC/TLC.

If we put floating gates aside for a moment, it can be observed that this configuration resembles a multi-input NAND gate. Word lines act as inputs and each separate bit line is an output. A bit line is tied to ground ("0") if and only if all gates are "1". This similarity to the NAND gate gave the name to this type of memory organization: NAND flash. Another type of flash memory, which won’t be described here, is NOR flash: it is faster for reading and provides random access to each separate cell. But because of its higher cost, it is mainly used to store and execute firmware code.

- Program

Programming also starts by selecting a page: a pass voltage, which opens cells regardless of their charge, is applied to the word lines of all other pages. Next, the bit lines corresponding to the bits we want to program in the page are grounded, and the word line of the selected page is pulled to the programming voltage. This voltage is high enough to force electrons through the insulator layer and into the floating gates. Floating gates in other cells are nearly unaffected because the voltage difference across them is too low. The program operation is much slower than the read operation: about 200 μs for SLC and, as with read, a couple of times longer for MLC/TLC. Even so, it is an order of magnitude faster than HDD’s access time.

It is reasonable to ask: why is the unit of programming a whole page and not a single cell? The problem is that the program operation uses high voltage, so the charges of nearby cells are disturbed. If multiple program operations could be issued to a single page (to different cells), this could corrupt cells we didn’t intend to program. Some SLC flash allows partial programming, that is, it is possible to first program cells 0, 5 and then 2, 3, 6 in a single page. But even then, the number of partial programmings before erasure is strictly limited. The only reasonable use of partial programming is to split a large physical page into a number of smaller logical pages, so that a single program operation is issued once per logical page.

- Erase

The key difference of erase is that it is a whole-block operation: all cells of one or more blocks are erased simultaneously. This limitation simplifies memory production and also removes the need to care about disturbing the charge of nearby cells. The inability to erase single pages is the second major drawback of flash memory (wear being the first one). Erase is performed very crudely: all word lines are grounded and a high-voltage source is connected to the body (substrate), which is shared by all cells. This forces electrons to move out of FG into the p-substrate, thus zeroing the charge of all floating gates.

Erase is even slower than programming: 1500 μs for SLC and, once again, a couple of times longer for MLC/TLC. But in practice it is not a big problem because, under normal circumstances, erases are performed in the background. If, for some reason, only a subset of pages needs to be erased, then a read-modify-write sequence has to be performed:

- first, all pages that must be preserved are read into a temporary buffer one by one

- then the block is erased as a whole

- then the buffered pages are programmed back one by one

Not only is this extremely slow, it also wears cells prematurely. To avoid such situations, SSDs employ smart algorithms described in the next section.

As a summary, here is the lifecycle of a flash block consisting of four pages, each with 8 SLC cells. Note that once a page is programmed, it remains in that state until an erase is performed, which acts on the whole block. There is no overwrite operation.
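The same lifecycle can be expressed as a toy Python model. The class and method names are made up for illustration, and the model deliberately ignores voltages, timing and wear limits: it only enforces the two rules stated above, namely that a page is programmed once and that erase acts on the whole block.

```python
class FlashBlock:
    """Toy model of a NAND block: pages are programmed once and erased together."""

    def __init__(self, pages=4, cells_per_page=8):
        self.cells_per_page = cells_per_page
        # None marks an erased (free) page; otherwise the page holds a tuple of bits.
        self.pages = [None] * pages
        self.erase_count = 0

    def program(self, page_no, bits):
        if self.pages[page_no] is not None:
            raise RuntimeError("page already programmed; erase the whole block first")
        if len(bits) != self.cells_per_page:
            raise ValueError("a page is programmed as a whole")
        self.pages[page_no] = tuple(bits)

    def read(self, page_no):
        return self.pages[page_no]

    def erase(self):
        """Erase acts on every page of the block at once and costs one P/E cycle."""
        self.pages = [None] * len(self.pages)
        self.erase_count += 1


block = FlashBlock()
block.program(0, [1, 0, 1, 1, 0, 0, 1, 0])
block.program(1, [0, 0, 0, 1, 1, 1, 0, 1])
try:
    block.program(0, [1] * 8)      # there is no overwrite operation
except RuntimeError as err:
    print("rejected:", err)
block.erase()                      # frees all four pages, including page 1
block.program(0, [1] * 8)          # now page 0 can be programmed again
```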

And here are typical performance figures of NAND flash. Most server-grade flash memory produced is of MLC type. SSDs intended for workstations may be MLC or TLC.

| | Read page | Program page | Erase block | P/E cycles |
|---|---|---|---|---|
| SLC | 25 μs | 200 μs | 1500 μs | 100,000 |
| MLC | 50 μs | 600 μs | 3000 μs | 3,000 - 10,000 |
| TLC | 75 μs | 900 μs | 4500 μs | 1,000 - 5,000 |
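To get a feeling for how expensive the read-modify-write sequence is, the figures from the table can be plugged into a small calculation. This is a rough sketch: it assumes a 64-page block, strictly sequential operations and no data transfer overhead, and the names are made up for illustration.

```python
# Per-operation latencies in microseconds, taken from the table above.
TIMINGS_US = {
    "SLC": {"read": 25, "program": 200, "erase": 1500},
    "MLC": {"read": 50, "program": 600, "erase": 3000},
    "TLC": {"read": 75, "program": 900, "erase": 4500},
}


def read_modify_write_us(cell_type, pages_to_preserve):
    """Time to rewrite a block in place: read survivors, erase, program them back."""
    t = TIMINGS_US[cell_type]
    return pages_to_preserve * (t["read"] + t["program"]) + t["erase"]


# Assumption: a 64-page block in which 63 pages must be preserved.
for cell_type in TIMINGS_US:
    print(cell_type, read_modify_write_us(cell_type, 63), "us")
```

Under these assumptions even SLC needs roughly 16 ms, and MLC/TLC need several times more.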

Controller

Like HDDs, SSDs carry a controller, which acts as a bridge between the memory chips and the outer interface. But the specifics of flash memory, namely erasure at block level and wear, force the controller to implement complex algorithms to overcome these limitations. As a result, the performance and life span of an SSD depend on the controller to such a great extent that the controller model is typically specified in the SSD datasheet.

Let’s start with the following situation. Suppose that you have a sector that is updated very often. For example, it backs a database row that stores the current ZWR/USD exchange rate. If sectors had a one-to-one mapping to pages of flash memory, as in HDD, every edit would require a read-modify-write sequence at block level. That would wear out frequently written pages very soon (after only a couple of thousand writes), while the long tail of rarely used pages would remain in a nearly virgin state. Such an approach is also undesirable because the performance of read-modify-write is very poor: about that of HDD’s access time.

To solve these problems, logical sectors do not have a fixed mapping to flash memory pages. Instead, there is an additional level of addressing called the flash translation layer (FTL), maintained by the controller. The controller stores a table that maps logical sectors to (chip, block, page) tuples, and this table is updated during the SSD lifetime. When a write() request is served, instead of performing a read-modify-write sequence, the controller searches for a free page, writes data into it and updates the table entry for the written sector. If you make 1000 writes to the same sector, then 1000 different pages will be occupied by data, with the table entry pointing to the page with the latest version.
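The idea of out-of-place writes can be sketched in a few lines of Python. This is a simplified illustration, not how any particular controller is implemented: physical pages are identified by a single number instead of a (chip, block, page) tuple, and all names are hypothetical.

```python
class SimpleFTL:
    """Toy flash translation layer: every write goes to a fresh page."""

    def __init__(self, total_pages):
        self.free_pages = list(range(total_pages))   # physical pages in erased state
        self.table = {}                              # logical sector -> physical page
        self.stale_pages = set()                     # pages holding old versions
        self.flash = {}                              # physical page -> stored data

    def write(self, sector, data):
        if not self.free_pages:
            raise RuntimeError("no free pages left")
        page = self.free_pages.pop(0)
        self.flash[page] = data
        old = self.table.get(sector)
        if old is not None:
            self.stale_pages.add(old)                # previous version is now garbage
        self.table[sector] = page
        return page

    def read(self, sector):
        page = self.table.get(sector)
        return None if page is None else self.flash[page]


ftl = SimpleFTL(total_pages=8)
for rate in (b"1.0", b"1.1", b"1.2"):
    ftl.write(0x111111, rate)        # three writes to the same logical sector
print(ftl.read(0x111111))            # b'1.2'
print(sorted(ftl.stale_pages))       # [0, 1]
```

Three writes to one sector consumed three physical pages, and only the table entry tells which of them holds the current version.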

An example of FTL mapping is displayed below. There are 16 logical sectors: some of them are valid and are mapped to physical pages, while others are not currently valid. Pages not referenced by FTL are either free or contain old versions of sectors.

The existence of FTL creates, in turn, another problem: dealing with pages that store outdated versions. To reclaim them, the controller performs garbage collection (GC). Normally, it runs in the background, when there are no pending requests from the OS to serve. If a block is full and all its pages contain outdated versions, the block may be safely erased and all its pages added to the pool of free pages for further use. It also makes sense to erase a block that contains only a small fraction of live pages, after copying them into free pages and updating the FTL table accordingly.
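The choice of blocks to reclaim can be sketched as follows. This is a simplified illustration: the 25% threshold is an arbitrary assumption, the function name is made up, and the sketch only counts how many live pages would have to be copied without tracking where they go.

```python
def collect_garbage(blocks, live_threshold=0.25):
    """Pick full blocks worth erasing and count the page copies this costs.

    `blocks` maps block id -> list of page states: "live", "stale" or "free".
    A full block is reclaimed when its share of live pages is at most
    `live_threshold`. Returns (ids of erased blocks, number of relocated pages).
    """
    erased, relocated = [], 0
    for block_id, pages in blocks.items():
        if "free" in pages:
            continue                      # block is not full yet, leave it alone
        live = pages.count("live")
        if live / len(pages) <= live_threshold:
            relocated += live             # live pages are copied to free pages elsewhere
            erased.append(block_id)
    for block_id in erased:
        blocks[block_id] = ["free"] * len(blocks[block_id])
    return erased, relocated


blocks = {
    0: ["stale", "stale", "stale", "stale"],   # all garbage: erase at no extra cost
    1: ["stale", "live", "stale", "stale"],    # one live page: copy it, then erase
    2: ["live", "live", "stale", "live"],      # mostly live: not worth it yet
    3: ["live", "free", "free", "free"],       # still has free pages
}
print(collect_garbage(blocks))                 # ([0, 1], 1)
```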

Obviously, FTL is effective only if there are plenty of free pages. Without free pages, an attempt to write to a sector will cause a blocking read-modify-write sequence, which is extremely bad for both performance and longevity. But the problem is that an SSD is always full by default. All those “free space left” metrics belong to filesystems and are unknown to the SSD. From the SSD’s perspective, once data is written to a sector, this sector is “live” forever, even if it no longer holds valid data from the filesystem’s point of view. Two special features are implemented in modern SSDs to resolve this problem.

The first feature is called the provisioning area (commonly known as over-provisioning). SSDs come with a bit more capacity than is specified in datasheets (typically 5-10% more). The provisioning area is not counted in the total capacity of the SSD. Its main purpose is to ensure that the SSD is never 100% full internally, thus nearly eliminating read-modify-write. The only exception is when the rate of writes is so high that background GC cannot keep pace with it. The second purpose of the provisioning area is to ensure that spare blocks exist to replace worn-out blocks.

The second feature is the TRIM command (the word is not an acronym but the name of an ATA command), also known as erase or discard. If the filesystem supports it, it may send TRIM along with a range of sectors to notify the SSD that the data in these sectors is no longer required. On receiving such a request, the controller removes the entries from the FTL table and marks the pages corresponding to the specified sectors as ready for garbage collection. After that, reading trimmed sectors without writing to them first returns unpredictable data (for security reasons, the controller will usually return zeroes without accessing any pages at all).

The image below demonstrates the techniques described above:

- Initially, all pages of block #0 are in erased state. No data is stored in sectors 0x111111, 0x222222, 0x333333: there are null pointers in the FTL table for them.

- Next, some data was written into sectors 0x111111 and 0x222222. The controller found that pages in block #0 were free and used them to store this data. After the write was completed, it also updated the FTL table to point to these pages.

- Next, sector 0x333333 was written to, and sectors 0x111111 and 0x222222 were overwritten. The controller wrote the new versions of the sectors into free pages and updated the table. Pages containing old versions were marked as ready for GC.

- Next, the filesystem decided that sector 0x222222 was not required anymore and sent a TRIM command. The controller removed the entry for this sector and marked the page as ready for GC.

- Finally, background GC was performed. Valid versions were moved to empty block #1, the table was updated appropriately and block #0 was erased.
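The five steps above can be replayed with a small simulation. This is a sketch under stated assumptions: six pages per block is a guess, all class and method names are hypothetical, and a real controller decides by itself when and what to collect.

```python
PAGES_PER_BLOCK = 6      # assumption for illustration only


class TinySSD:
    def __init__(self, blocks=2):
        # State of each physical page: "free", "live" or "stale" (ready for GC).
        self.state = {(b, p): "free"
                      for b in range(blocks) for p in range(PAGES_PER_BLOCK)}
        self.ftl = {}                                  # sector -> (block, page)

    def _free_page(self, block=None):
        for addr in sorted(self.state):
            if self.state[addr] == "free" and (block is None or addr[0] == block):
                return addr
        raise RuntimeError("no free pages")

    def trim(self, sector):
        """Drop the FTL entry and mark the backing page as ready for GC."""
        addr = self.ftl.pop(sector, None)
        if addr is not None:
            self.state[addr] = "stale"

    def write(self, sector, block=None):
        self.trim(sector)                              # old version becomes stale
        addr = self._free_page(block)
        self.state[addr] = "live"
        self.ftl[sector] = addr

    def gc(self, victim, target):
        """Move live pages out of `victim` into `target`, then erase `victim`."""
        for sector, addr in list(self.ftl.items()):
            if addr[0] == victim:
                self.write(sector, block=target)
        for p in range(PAGES_PER_BLOCK):
            self.state[(victim, p)] = "free"


ssd = TinySSD()                                        # step 1: all erased
ssd.write(0x111111); ssd.write(0x222222)               # step 2
ssd.write(0x333333); ssd.write(0x111111); ssd.write(0x222222)   # step 3
ssd.trim(0x222222)                                     # step 4
ssd.gc(victim=0, target=1)                             # step 5
print({hex(s): a for s, a in ssd.ftl.items()})
# {'0x333333': (1, 0), '0x111111': (1, 1)}
```

After the last step both surviving sectors live in block #1, sector 0x222222 has no mapping, and every page of block #0 is free again.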

Besides garbage collection, the controller is also responsible for wear leveling. Its ultimate goal is to distribute erases among all blocks as evenly as possible. The controller stores a set of metrics for each block: the number of times the block was erased and the timestamp of its last erase. Not only are these metrics used to select where to direct the next write request, but the controller also performs background wear leveling. The idea behind it is that if a block is close to its P/E limit, then writes to this block should be avoided, but there is no problem in using it for reading. So, the controller searches for pages with “stable” data in them: pages that were written a long time ago and have not been modified since (e.g. pages that back an OS installation or a multimedia collection). Next, the pages of the highly worn block and the “stable” pages are exchanged. This requires erasing the nearly dead block one more time, but is beneficial in the long run: it reduces the chance that the highly worn block will be overwritten soon, hence prolonging its life.
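Both decisions described above, where to send the next write and which blocks to exchange, can be sketched as simple selections over per-block metrics. This is an illustration only: the names are hypothetical, timestamps are in arbitrary units, and the sketch tracks the time of the last write per block (an assumption) to decide which data is “stable”.

```python
from dataclasses import dataclass


@dataclass
class BlockInfo:
    block_id: int
    erase_count: int      # how many P/E cycles the block has been through
    last_write: int       # time of the last write into the block


def pick_block_for_write(free_blocks):
    """Send the next write to the least-worn free block."""
    return min(free_blocks, key=lambda b: b.erase_count)


def pick_swap_pair(blocks, now, stable_age):
    """Pair the most-worn block with the least-worn block holding stable data.

    Returns (worn, cold), or None if no block has been untouched for `stable_age`.
    """
    worn = max(blocks, key=lambda b: b.erase_count)
    stable = [b for b in blocks
              if b is not worn and now - b.last_write >= stable_age]
    if not stable:
        return None
    cold = min(stable, key=lambda b: b.erase_count)
    return worn, cold


blocks = [
    BlockInfo(0, erase_count=2900, last_write=990),   # hot and nearly worn out
    BlockInfo(1, erase_count=120, last_write=10),     # e.g. OS installation files
    BlockInfo(2, erase_count=800, last_write=950),
    BlockInfo(3, erase_count=90, last_write=980),     # little worn, recently written
]
worn, cold = pick_swap_pair(blocks, now=1000, stable_age=500)
print(worn.block_id, cold.block_id)                   # 0 1
print(pick_block_for_write(blocks[1:]).block_id)      # 3
```

After the exchange, the stable data sits in the worn block, which is then rarely rewritten, while the fresh block takes over the frequently updated data.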

SSD assembly